In response to Cineplex Digital Media’s digital billboards performing facial detection, we submitted the following letter.

See PDF version here.

Thursday, December 4, 2025

Dear Chief Privacy Officer at Cineplex Digital Media:

We are writing to you with regard to privacy concerns surrounding your use of Anonymous Video Analytics (AVA) in digital signage near Union Station Bus Terminal (USBT) and elsewhere in Canada. We represent both technologists and regular Canadians who see the value in innovation and technological development for the public good.

In 2020, the Office of the Privacy Commissioner of Canada (OPC), as well as the privacy commissioners for Alberta and British Columbia, released a report on a case involving use of AVA technology by Cadillac Fairview (CF) in 2018.1 The report found that CF’s AVA deployment constituted a violation of privacy, and that “express opt-in consent would be required, as they determined that some of the information involved was sensitive and its surreptitious collection in this context would be outside the reasonable expectations of consumers.”2

We have a number of concerns relating to the aforementioned report and would like some clarifications.

Concern 1: How has the joint investigation conducted in 2020 by the Offices of the Privacy Commissioner of Canada, Alberta and British Columbia affected Cineplex Digital Media’s (CDM) deployment of AVA technology?

Concern 2: A Toronto Star article by Kevin Jiang mentions that CDM has consulted with the OPC on this project.3 How has this consultation mitigated the privacy issues that have arisen in the use of AVA?

We also have concerns with certain statements in the privacy notice affixed to AVA-enabled digital signage by Cineplex (CDM).4

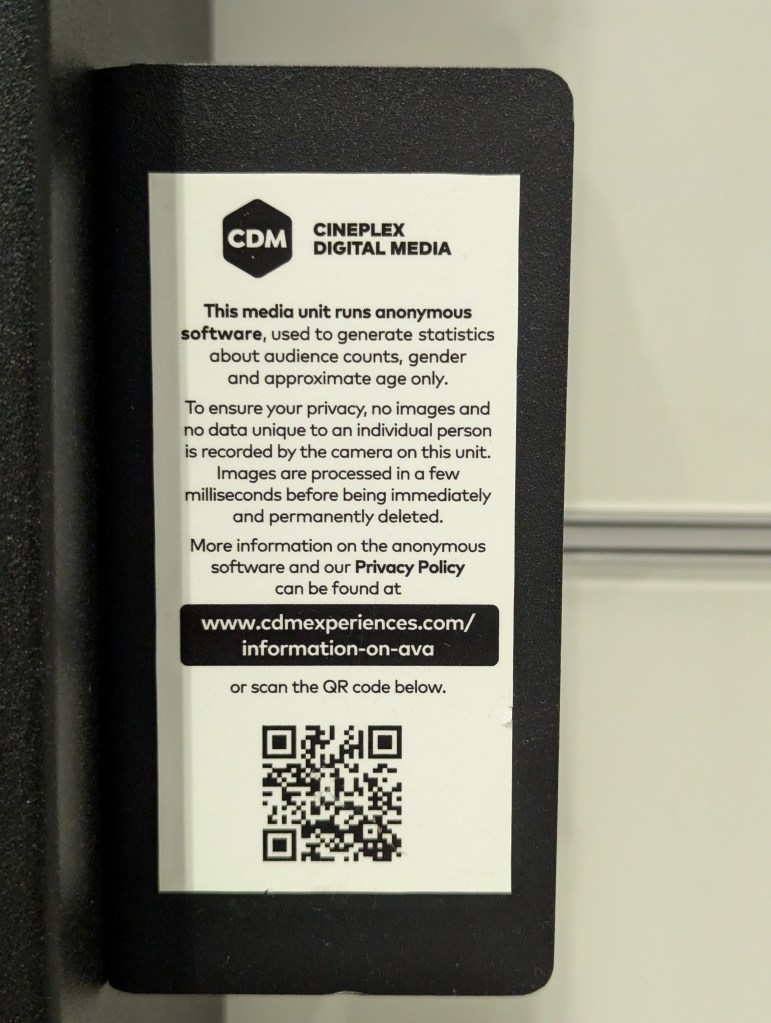

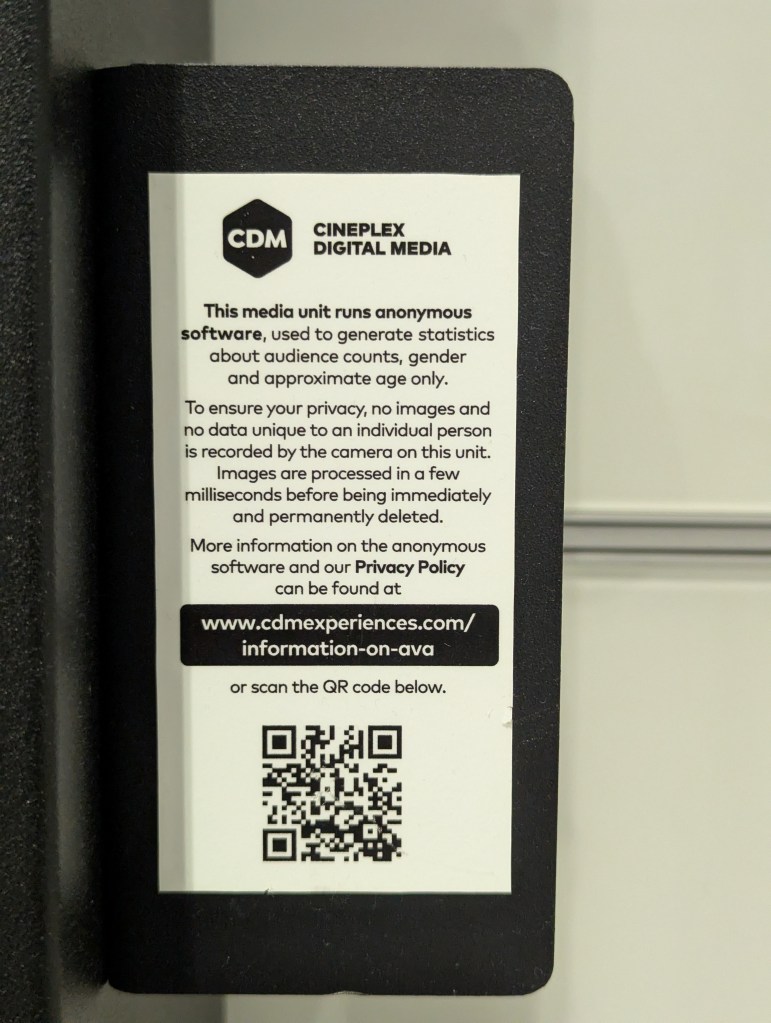

| Statement 1: This media unit runs anonymous software, used to generate statistics about audience counts, gender and approximate age only. |

Concern 3: What is the intended meaning of the phrase “anonymous software” per Statement 1 (also see Figure 1 in the Appendix)?

| Statement 2: Images are processed in a few milliseconds before being immediately and permanently deleted. |

Concern 4: If images are processed in a matter of milliseconds before being permanently deleted, this implies that images are processed only on-device, rather than being sent to a remote server or cloud service. Are images processed on-device?

Concern 5: Is any personally identifying information or biometric data collected, inferred, extracted or stored beyond statistics on audience counts, gender and approximate age?

Concern 6: Does the data collected by AVA reside in Canada?

Concern 7: What measures are in place to protect data privacy during transfer and storage of data in remote servers or cloud facilities?

We also have a number of concerns with certain statements in your notice of disclosure:5

| Statement 3: We ensure that the public is well informed as [sic] the presence of anonymous video analytics systems by placing signage and stickers on kiosks, at property entrance, exit ways and other places along the path to the property. |

Concern 8: During an investigation of Union Station Bus Terminal in early November 2025 by members of Technologists for Democracy, no warnings of AVA systems were found except those attached to the digital signage itself. This runs contrary to the above statement.

| Statement 4: Camera sensors are installed in plain sight and are never hidden. We want the public to understand exactly where they are placed so, if they chose, they can avoid it. |

Concern 9: Cameras attached to Cineplex’s digital signage are visible but quite small and difficult to identify. Over 90% of the members of the general public interviewed by Technologists for Democracy were not previously aware of the cameras attached to said signage. The areas adjacent to USBT where the digital ads are currently placed are unavoidable for individuals passing through the hallways from Union Station (i.e., two digital ads are placed very close to a train timetable, while the other faces an entrance to USBT itself). This and Concern 8 run contrary to the statements that cameras are “in plain sight”, “never hidden” and “if they [the public] chose, they can avoid it.”

We also have a number of concerns with certain statements in your privacy policy:6

| Statement 5: Other Uses: We may also use Personal Information, where necessary, for: establishing, maintaining and/or fulfilling relationships with our business partners, third-party vendors of products and/or services, as well as our corporate and business customers; |

Concern 10: Are images of individuals sold to any third parties?

Concern 11: Are statistics on audience counts, gender and approximate age sold to any third parties?

Concern 12: Are any other data collected by AVA sold to third parties?

| Statement 6: Cineplex may also share Personal Information necessary to meet legal, audit, regulatory, insurance, security or other similar requirements. For instance, Cineplex may be compelled to disclose Personal Information in response to a law, regulation, court order, subpoena, valid demand, search warrant, government investigation or other legally valid request or enquiry. We may also share information with our accountants, auditors, agents and lawyers in connection with the enforcement or protection of our legal rights. |

Concern 13: If data collected by AVA systems or digital signage is requested and sent to third parties for legally valid requests or enquiries, is there a mechanism for informing the public?

Concern 14: If data collected by AVA systems or digital signage is requested and sent to third parties for legally valid requests or enquiries, is there a mechanism for third-party audits of such data requests or enquiries?

Concern 15: It has been reported that CDM is being sold to US-based Creative Realities,7 which will take over ad billboards spanning malls and office buildings. Under this deal, will Creative Realities also take ownership of the AVA systems?

We request that Cineplex Digital Media provide clarity on:

- How you are ensuring that individuals captured by AVA systems remain anonymous.

- How you are ensuring individuals are properly informed of recording and facial detection performed on premises.

- Your data policy and transparency around sharing of information with third parties.

- How your AVA system differs from Cadillac Fairview’s AVA system involved in the joint investigation conducted in 2020 by the Offices of the Privacy Commissioner of Canada, Alberta and British Columbia.

Innovation does not have to come at the cost of personal privacy. We are concerned about the lack of clarity surrounding the privacy notice and the privacy statements on CDM’s website. We are also alarmed at the prospect of a foreign company taking over CDM’s ad billboards to service software that may operate outside of Canadian laws and regulations.

We would greatly appreciate a response within 10 business days. Please reply to this email or reach out directly to Khasir Hean at khasir.hean@gmail.com / 226-927-2677 if you have any questions or would like to discuss details further.

Best,

Adam Motaouakkil

[email redacted for privacy]

Jitka Bartosova

[email redacted for privacy]

Khasir Hean

khasir.hean@gmail.com

Technologists for Democracy

techfordemocracy.ca

Signing organizations:

Canadian Tech for Good

Nikita Desai

[email redacted for privacy]

More Transit Southern Ontario (MTSO)

Jonathan Lee How Cheong

[email redacted for privacy]

https://www.moretransit.ca/

OpenMedia

Matt Hatfield

[email redacted for privacy]

https://openmedia.org/

Tech Workers Coalition Canada

Jenny Zhang

[email redacted for privacy]

https://techworkerscoalition.org/canada/

TTCriders

Andrew Pulsifer

[email redacted for privacy]

https://www.ttcriders.ca/

Signing individuals:

[names and emails of 25 individuals redacted for privacy]

Appendix

1. Joint investigation of the Cadillac Fairview Corporation Limited by the Privacy Commissioner of Canada, the Information and Privacy Commissioner of Alberta, and the Information and Privacy Commissioner for British Columbia. October 28, 2020. https://www.priv.gc.ca/en/opc-actions-and-decisions/investigations/investigations-into-businesses/2020/pipeda-2020-004/

2. Anonymous video analytics’ future uncertain after Canadian privacy regulators’ investigation. November 4, 2020. https://www.blg.com/en/insights/2020/11/anonymous-video-analytics-future-uncertain-after-canadian-privacy-regulator-investigation

3. Jiang, Kevin. These ads near Union Station and other places around Toronto could be recording you. What you need to know. November 5, 2025. https://www.thestar.com/news/gta/these-ads-near-union-station-and-other-places-around-toronto-could-be-recording-you-what/article_7af7c920-1ce7-4b19-98db-4c22d742f202.html

4. See Appendix for the privacy notice in question (Figure 1).

5. Information on AVA | CDM. https://www.cdmexperiences.com/information-on-ava

6. Privacy Policy | CDM. Effective date April 10, 2024. https://www.cdmexperiences.com/privacy-policy

7. Deschamps, Tara. Cineplex selling digital signage unit to U.S. company Creative Realities for $70M. October 16, 2025. https://toronto.citynews.ca/2025/10/16/cineplex-digital-media-sale-signage/